Final Year Dissertation Project

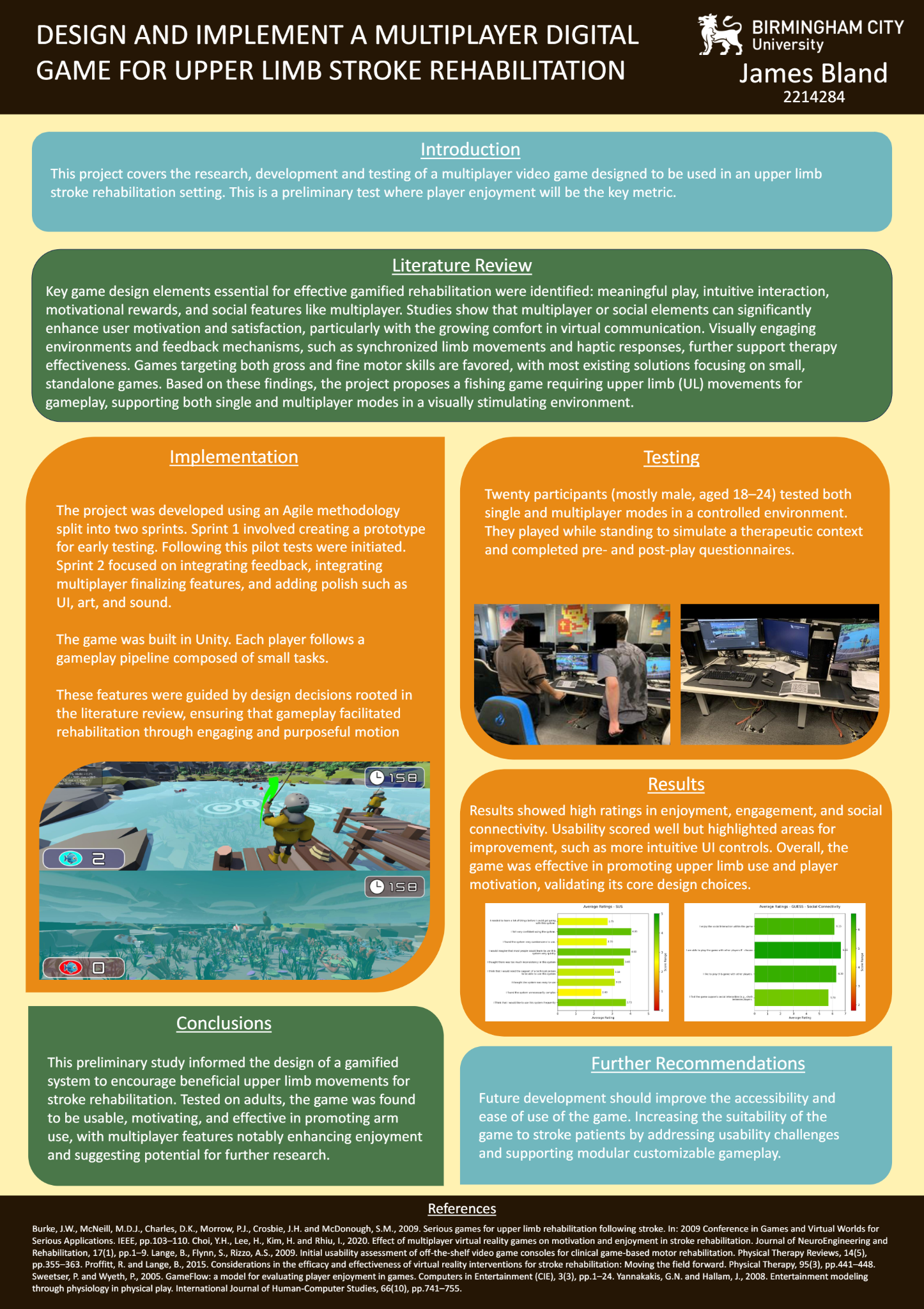

Over the course of approximately seven months, I designed, implemented, and tested a multiplayer game aimed at encouraging players to perform movements identified as beneficial for stroke rehabilitation. The project aimed to increase patient motivation and engagement, compared with conventional rehabilitation methods, through interactive, goal-oriented gameplay.

The project was entered into the Unity for Humanity Grant and received an Honourable Mention, as highlighted in the official Unity blog post announcing the winners.

Throughout development, I applied a broad range of skills developed during my Games Technology course, including:

Graphics Programming

Implemented real-time water ripple effects to enhance visual feedback and immersion.

Gameplay & Systems Programming

Designed and implemented core gameplay systems tailored to rehabilitation-focused player interactions.

AI Implementation

Developed AI behaviours including wandering logic and finite state machines to control non-player entities.

Audio Implementation

Integrated FMOD for adaptive and responsive audio feedback.

Tools Programming

Created custom development tools to streamline iteration and testing.

Third-Party Integration

Adapted and integrated a third-party open-source API (the etee controller input API) to extend functionality and support multiplayer features.
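The write-up doesn't say how the ripples were produced. A common real-time technique is a two-buffer height-field simulation: each cell's next height is half the sum of its four neighbours minus its previous height, scaled by a damping factor so waves fade. The Python sketch below shows that technique on a CPU grid as an illustration only; the grid size, the `damping` value, and the fixed (zero) border are assumptions, and a game version could run the same update in a shader or on a render texture.

```python
def step_ripples(current, previous, damping=0.98):
    """Advance a height-field ripple simulation by one step.

    `current` and `previous` are equally sized 2D lists of heights.
    Border cells are kept at 0.0, acting as a fixed boundary.
    """
    rows, cols = len(current), len(current[0])
    nxt = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            neighbours = (current[y - 1][x] + current[y + 1][x]
                          + current[y][x - 1] + current[y][x + 1])
            # Discrete wave update: half the neighbour sum minus the old height,
            # then damping so the ripple loses energy over time.
            nxt[y][x] = (neighbours / 2.0 - previous[y][x]) * damping
    return nxt


# Usage: drop a disturbance in the middle of a 9x9 pond and advance a few steps.
size = 9
previous = [[0.0] * size for _ in range(size)]
current = [[0.0] * size for _ in range(size)]
current[4][4] = 1.0
for _ in range(3):
    current, previous = step_ripples(current, previous), current
```

Only two buffers are needed because the update reads the current and previous frames and writes the next one, after which the buffers are rotated.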

Knightly Knockout

Knightly Knockout is a networked multiplayer sword-fighting game built using full-stack web technologies. The game allows players to engage in real-time combat with physics-driven sword interactions in a 3D environment.

3D Graphics & Physics: Built using Three.js for rendering and ammo.js for physics simulation, enabling realistic sword collisions and character movement.

Networking: Implemented real-time multiplayer functionality using Socket.io and Node.js, handling player input, synchronization, and game state across clients.

Backend & Database: Developed the server with Express.js, Node.js, and MySQL, including secure player data storage and game session management.

File & Asset Handling: Used Multer for file uploads and fs/path modules for server-side asset management.

Security & Utilities: Used the Node.js crypto module for security-sensitive operations and enabled CORS (Cross-Origin Resource Sharing) so the client could make cross-origin requests to the server.

Additional technologies used include HTML, CSS, JavaScript, npm, and Nodemon for development workflow and project management.
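As an illustration of the server-authoritative pattern described above (clients send inputs; the server applies them each tick and broadcasts the resulting state), here is a minimal Python sketch. It is not the project's code: `GameServer`, its methods, the movement speed, and the input clamping are hypothetical, and Socket.io events are replaced with plain method calls so the logic can run on its own.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    x: float = 0.0
    z: float = 0.0


@dataclass
class GameServer:
    """Hypothetical authoritative server: clients send inputs, the server owns state."""
    speed: float = 5.0  # units per second (assumed value)
    players: dict = field(default_factory=dict)
    pending_inputs: dict = field(default_factory=dict)
    tick: int = 0

    def on_connect(self, player_id):
        self.players[player_id] = Player()
        self.pending_inputs[player_id] = []

    def on_input(self, player_id, move_x, move_z):
        # In a real game this would be a socket event handler; here it only queues input.
        self.pending_inputs[player_id].append((move_x, move_z))

    def step(self, dt):
        """Apply queued inputs, advance the tick, and return a snapshot to broadcast."""
        for player_id, inputs in self.pending_inputs.items():
            player = self.players[player_id]
            for move_x, move_z in inputs:
                # Clamp input so a client cannot move faster than allowed.
                move_x = max(-1.0, min(1.0, move_x))
                move_z = max(-1.0, min(1.0, move_z))
                player.x += move_x * self.speed * dt
                player.z += move_z * self.speed * dt
            inputs.clear()
        self.tick += 1
        return {
            "tick": self.tick,
            "players": {pid: (p.x, p.z) for pid, p in self.players.items()},
        }


server = GameServer()
server.on_connect("alice")
server.on_input("alice", 1.0, 0.0)  # move right at full speed
snapshot = server.step(0.5)         # half a second of simulation
```

Keeping movement on the server means clients only ever display state they receive, which makes cheating by sending inflated positions much harder.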

A short video demonstrating gameplay is available on my YouTube channel https://www.youtube.com/@james...

Jett Engine

Jett Engine is a custom game engine written in C++, leveraging OpenGL for GPU-accelerated graphics rendering. The project provided an opportunity to apply advanced C++ concepts, clean code principles, and software design patterns in a graphics-intensive environment.

The engine implements a range of modern graphics techniques, including:

Shaders & Rendering

Developed GLSL vertex and fragment shaders for dynamic effects, including ocean wave simulation, skybox reflections, and Blinn-Phong shading.

Implemented post-processing effects to enhance visual quality.

Graphics Optimisations

Used instanced rendering to draw many copies of the same mesh in a single draw call, reducing CPU-side draw-call overhead.

Advanced Scene Features

Procedural ocean waves driven by a vertex shader.

Realistic reflections and lighting effects for immersive environments.
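Blinn-Phong replaces Phong's reflection vector with the half vector H = normalize(L + V) between the light direction L and view direction V, so the specular term becomes max(N · H, 0)^shininess. The engine's GLSL isn't reproduced here; this Python sketch evaluates the same per-fragment formula on the CPU, with an assumed ambient strength of 0.1, white light, and a shininess of 32.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    # Return zero vectors unchanged rather than dividing by zero.
    return tuple(c / length for c in v) if length > 0 else tuple(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong(normal, to_light, to_view, base_colour,
                light_colour=(1.0, 1.0, 1.0), ambient=0.1, shininess=32.0):
    """Return the shaded RGB colour for one surface point.

    All direction vectors point away from the surface.
    """
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_view)

    diffuse = max(dot(n, l), 0.0)
    specular = 0.0
    if diffuse > 0.0:
        # Half vector between light and view directions (the "Blinn" part).
        h = normalize(tuple(a + b for a, b in zip(l, v)))
        specular = max(dot(n, h), 0.0) ** shininess

    return tuple(
        bc * lc * (ambient + diffuse) + lc * specular
        for bc, lc in zip(base_colour, light_colour)
    )


colour = blinn_phong(normal=(0, 1, 0), to_light=(1, 1, 0),
                     to_view=(0, 1, 0), base_colour=(0.8, 0.2, 0.2))
```

In a fragment shader the same lines map almost one-to-one onto GLSL's `normalize`, `dot`, `max`, and `pow`.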

This project strengthened my understanding of GPU programming, low-level graphics rendering, and real-time performance optimisation while reinforcing clean architecture and modular engine design.

Unidentified Fossil Organizer

Unidentified Fossil Organizer is a collaborative project developed by a multidisciplinary team consisting of myself, another Games Technology student, and two audio students. The game was originally created for the client Think Tank Birmingham over approximately 12 weeks, with a focus on core gameplay and mechanics.

Following the initial build, the team spent an additional 12 weeks remastering all audio to professional quality, incorporating more complex sound design to enhance player experience and game identity. During this phase, the audio team experimented with features that allowed for advanced FMOD integration, professional recording, and editing techniques.

Collaborated with the team to integrate audio systems into gameplay using FMOD, ensuring dynamic and responsive sound implementation.

Assisted in implementing features that allowed gameplay to interact with audio events for a more immersive experience.

Worked with professional audio students to synchronize gameplay mechanics with high-quality sound assets.

FMOD for audio implementation

Professional recording and editing software/hardware

Game development using Unity

This project strengthened my ability to work in a multidisciplinary team, integrate new technologies into a project, and leverage audio to enhance game identity and player engagement.

By experimenting with sound, we added the following features to the game:

This project was developed as part of the AI in Games module and provided practical experience in designing and implementing a range of artificial intelligence systems commonly used in games. The focus of the project was on agent decision-making, pathfinding, and scalable AI architectures, with an emphasis on modularity, extensibility, and tooling.

A core component of the project is a custom finite state machine (FSM) framework, designed to control agent behaviour in a clear and maintainable way. To support rapid iteration and usability, the system is accompanied by a bespoke Unity editor window, allowing finite state machines to be visually created, edited, and debugged directly within the editor.

This approach significantly improved workflow efficiency and enabled complex behaviours to be authored without modifying runtime code. The FSM system was used to drive in-game agent logic, demonstrating reactive state transitions and behaviour changes in response to environmental conditions.
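The FSM framework and its editor window are Unity-specific and not shown here. The runtime core, states with enter/update/exit hooks and transitions guarded by conditions, can be sketched in Python. This is a hypothetical minimal version rather than the project's API; the `Wander`/`Flee` states and the `threat_distance` value are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class State:
    name: str
    on_enter: Callable[[dict], None] = lambda ctx: None
    on_update: Callable[[dict], None] = lambda ctx: None
    on_exit: Callable[[dict], None] = lambda ctx: None


@dataclass
class StateMachine:
    current: State
    # Maps a state name to a list of (condition, target state) pairs.
    transitions: dict = field(default_factory=dict)

    def add_transition(self, source, target, condition):
        self.transitions.setdefault(source.name, []).append((condition, target))

    def update(self, ctx):
        # Take the first transition whose condition holds, then update the active state.
        for condition, target in self.transitions.get(self.current.name, []):
            if condition(ctx):
                self.current.on_exit(ctx)
                self.current = target
                self.current.on_enter(ctx)
                break
        self.current.on_update(ctx)


wander = State("Wander")
flee = State("Flee", on_enter=lambda ctx: ctx["log"].append("entered Flee"))

fsm = StateMachine(current=wander)
fsm.add_transition(wander, flee, lambda ctx: ctx["threat_distance"] < 3.0)
fsm.add_transition(flee, wander, lambda ctx: ctx["threat_distance"] >= 10.0)

ctx = {"threat_distance": 20.0, "log": []}
fsm.update(ctx)              # no condition holds: stays in Wander
ctx["threat_distance"] = 2.0
fsm.update(ctx)              # threat is close: switches to Flee
```

Because states and transitions are plain data, an editor tool can build the same graph visually and serialise it, which is the role the custom Unity window plays.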

The project includes the implementation of several pathfinding algorithms, designed using the Strategy design pattern. Each algorithm is encapsulated within a ScriptableObject, allowing a collection of interchangeable pathfinding strategies to exist within the project’s asset folder.

This architecture enables pathfinding behaviour to be swapped at runtime, either via the Unity Inspector or through code, without requiring changes to the pathfinding agent itself. This design encourages experimentation, comparison of algorithms, and reuse across different agents and scenarios.
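The same Strategy structure can be shown outside Unity. In this Python sketch, an abstract `PathfindingStrategy` plays the role a ScriptableObject base class would, and two interchangeable strategies (breadth-first search and A* with a Manhattan heuristic) operate on an assumed 4-connected grid where `1` marks a wall. All class names are hypothetical; the project's actual set of algorithms isn't listed.

```python
import heapq
from abc import ABC, abstractmethod
from collections import deque


class PathfindingStrategy(ABC):
    """Interchangeable pathfinding algorithm (the 'strategy')."""

    @abstractmethod
    def find_path(self, grid, start, goal):
        """Return a list of (row, col) cells from start to goal, or [] if unreachable."""


def neighbours(grid, cell):
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] == 0:
            yield (nr, nc)


def rebuild(came_from, goal):
    path = [goal]
    while came_from[path[-1]] is not None:
        path.append(came_from[path[-1]])
    return path[::-1]


class BreadthFirst(PathfindingStrategy):
    def find_path(self, grid, start, goal):
        came_from = {start: None}
        frontier = deque([start])
        while frontier:
            cell = frontier.popleft()
            if cell == goal:
                return rebuild(came_from, goal)
            for nxt in neighbours(grid, cell):
                if nxt not in came_from:
                    came_from[nxt] = cell
                    frontier.append(nxt)
        return []


class AStar(PathfindingStrategy):
    def find_path(self, grid, start, goal):
        def h(cell):  # Manhattan distance: admissible on a 4-connected grid
            return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

        came_from = {start: None}
        cost = {start: 0}
        frontier = [(h(start), start)]
        while frontier:
            _, cell = heapq.heappop(frontier)
            if cell == goal:
                return rebuild(came_from, goal)
            for nxt in neighbours(grid, cell):
                new_cost = cost[cell] + 1
                if nxt not in cost or new_cost < cost[nxt]:
                    cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_cost + h(nxt), nxt))
        return []


class PathfindingAgent:
    """Knows only the interface, so strategies can be swapped at runtime."""

    def __init__(self, strategy: PathfindingStrategy):
        self.strategy = strategy

    def plan(self, grid, start, goal):
        return self.strategy.find_path(grid, start, goal)


grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
agent = PathfindingAgent(BreadthFirst())
bfs_path = agent.plan(grid, (0, 0), (2, 0))
agent.strategy = AStar()  # swap the algorithm without touching the agent
astar_path = agent.plan(grid, (0, 0), (2, 0))
```

Swapping `agent.strategy` corresponds to assigning a different ScriptableObject asset in the Inspector.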

A boids-based flocking system was implemented to explore group movement and emergent behaviour. Two versions of the system were created:

A single-threaded implementation for clarity and ease of debugging

A multithreaded implementation utilising the Unity Job System to improve performance and scalability

This comparison highlights the benefits of data-oriented design and parallel processing when working with large numbers of agents.
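Boids steer each agent using three rules applied to its neighbours: separation (avoid crowding), alignment (match the average heading), and cohesion (move towards the local centre). The Python sketch below is a single-threaded 2D version of one update step; the neighbour radius, rule weights, and speed cap are assumed values. It reads the previous frame's state and writes a new list, the same read/write split that lets a Job System version process agents in parallel safely.

```python
import math
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def step_boids(boids, dt, radius=5.0, w_sep=1.5, w_ali=1.0, w_coh=1.0, max_speed=4.0):
    """Return a new list of boids after one update (reads old state, writes new)."""
    new_boids = []
    for b in boids:
        sep_x = sep_y = ali_x = ali_y = coh_x = coh_y = 0.0
        count = 0
        for other in boids:
            if other is b:
                continue
            dx, dy = other.x - b.x, other.y - b.y
            dist = math.hypot(dx, dy)
            if 0.0 < dist < radius:
                count += 1
                sep_x -= dx / (dist * dist)  # push away, stronger when closer
                sep_y -= dy / (dist * dist)
                ali_x += other.vx
                ali_y += other.vy
                coh_x += other.x
                coh_y += other.y
        vx, vy = b.vx, b.vy
        if count:
            # Alignment: steer towards the neighbours' average velocity.
            vx += w_ali * (ali_x / count - b.vx) * dt
            vy += w_ali * (ali_y / count - b.vy) * dt
            # Cohesion: steer towards the neighbours' centre of mass.
            vx += w_coh * (coh_x / count - b.x) * dt
            vy += w_coh * (coh_y / count - b.y) * dt
            # Separation: avoid crowding.
            vx += w_sep * sep_x * dt
            vy += w_sep * sep_y * dt
        speed = math.hypot(vx, vy)
        if speed > max_speed:
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        new_boids.append(Boid(b.x + vx * dt, b.y + vy * dt, vx, vy))
    return new_boids


flock = [Boid(0.0, 0.0, 1.0, 0.0), Boid(1.0, 1.0, 0.0, 1.0), Boid(2.0, 0.0, 1.0, 1.0)]
for _ in range(10):
    flock = step_boids(flock, dt=0.1)
```

The inner loop makes this O(n²) per step; spatial partitioning and parallel jobs are the usual ways to scale it to large flocks.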

These systems were integrated into a small interactive scenario featuring a mouse character controlled by a finite state machine. The agent exhibits distinct behaviours and dynamically reacts to global changes in its environment, demonstrating the interaction between decision-making logic and environmental stimuli.

The module also introduced Behaviour Trees and Goal-Oriented Action Planning (GOAP) as alternative approaches to agent decision-making. While these systems were explored at an introductory level, they highlighted the strengths and limitations of different AI architectures. Further exploration of these techniques is planned to support more scalable and expressive AI behaviour in future projects.

Throughout development, emphasis was placed on clean code architecture and reusability. Systems were designed to be modular and decoupled, allowing individual components—such as decision-making logic or pathfinding algorithms—to be extended or replaced with minimal impact on the wider codebase.

The use of ScriptableObjects, editor tooling, and design patterns reflects a production-oriented approach to AI development rather than a one-off prototype.

Buzzard

Buzzard is a mobile game published on the Google Play Store. This project provided valuable experience in developing, testing, and deploying a game specifically for mobile platforms. Throughout development, key considerations such as UI scalability, performance, and mobile-friendly control schemes were prioritised to ensure the game was well suited to its target platform.

The game leverages several hardware features unique to mobile devices:

Microphone Integration

The device microphone is used in combination with a Hugging Face API to create interactive cinematic moments where players must verbally respond to in-game characters to progress. The microphone is also used to trigger custom audio playback when the player’s ship is destroyed.

Gyroscope Controls

The phone’s gyroscope allows players to control the pitch and roll of the spaceship by physically tilting the device. This control scheme can be disabled in the settings menu, allowing players to switch to on-screen virtual joysticks instead.

Haptic Feedback

Vibration is used to provide tactile feedback when enemy ships are defeated. The vibration strength is dynamically adjusted based on the distance between the player and the enemy, enhancing immersion.
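The exact distance-to-strength mapping isn't specified. One simple option, sketched here in Python as an assumption, is a linear falloff: full strength when the enemy is destroyed right next to the player, fading to zero at a hypothetical `max_distance`. The output uses a 0 to 255 scale because Android's `VibrationEffect.createOneShot` accepts amplitudes from 1 to 255; a result of 0 means no vibration should be triggered.

```python
import math


def haptic_amplitude(player_pos, enemy_pos, max_distance=50.0, max_amplitude=255):
    """Map the player-enemy distance to a vibration amplitude.

    Linear falloff (assumed): max_amplitude at distance 0, 0 at or beyond max_distance.
    A return value of 0 means "don't vibrate".
    """
    distance = math.dist(player_pos, enemy_pos)
    strength = max(0.0, 1.0 - distance / max_distance)
    return round(strength * max_amplitude)


amplitude = haptic_amplitude((0.0, 0.0, 0.0), (10.0, 0.0, 0.0))
if amplitude > 0:
    pass  # hand `amplitude` to the platform's vibration API here
```

A squared or exponential falloff would make distant kills fade out faster; the linear version is simply the easiest to tune.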

The project integrates several third-party frameworks and services:

Google Play Games Services

Google account login

Persistent local and cloud-based data saving

Achievement system

Cinemachine

Dynamic camera behaviour

Support for both first-person and third-person perspectives

Unity Cloud Services

In-game advertisements

Addressables system to reduce initial install size and improve download efficiency

During development, I regularly refactored code to maintain clarity and scalability. The project is organised into small, atomic classes, which significantly improved development speed and enabled the addition of more complex features.

A particular highlight is the ship customisation system, which makes extensive use of abstract classes, inheritance, and abstract/virtual methods. This design allows new ship components and behaviours to be added with minimal changes to existing code, demonstrating strong object-oriented programming principles.
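The same structure can be illustrated with Python's `abc` module. In this hypothetical sketch, `ShipComponent` is the abstract base class, `apply_stats` is abstract (every part must implement it), and `on_fire` has a default that subclasses may override, which is roughly what a C# virtual method provides. `Ship` depends only on the base class, so adding a new part means adding a subclass. The component names and stats are invented.

```python
from abc import ABC, abstractmethod


class ShipComponent(ABC):
    """Base class for any part that can be fitted to a ship."""

    def __init__(self, name):
        self.name = name

    @abstractmethod
    def apply_stats(self, stats):
        """Every component must modify the ship's stats (abstract method)."""

    def on_fire(self):
        """Optional hook with a default (like a C# virtual method)."""
        return None


class Engine(ShipComponent):
    def __init__(self, name, thrust):
        super().__init__(name)
        self.thrust = thrust

    def apply_stats(self, stats):
        stats["speed"] += self.thrust


class LaserCannon(ShipComponent):
    def __init__(self, name, damage):
        super().__init__(name)
        self.damage = damage

    def apply_stats(self, stats):
        stats["damage"] += self.damage

    def on_fire(self):  # overrides the default behaviour
        return f"{self.name} fires for {self.damage} damage"


class Ship:
    """Works with any ShipComponent, so new parts need no changes here."""

    def __init__(self, components):
        self.components = components

    def stats(self):
        totals = {"speed": 0.0, "damage": 0.0}
        for component in self.components:
            component.apply_stats(totals)
        return totals

    def fire(self):
        messages = []
        for component in self.components:
            message = component.on_fire()
            if message is not None:
                messages.append(message)
        return messages


ship = Ship([Engine("Ion Drive", thrust=10.0), LaserCannon("Pulse Laser", damage=5.0)])
print(ship.stats())
print(ship.fire())
```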


Necrosis

Necrosis is a console-targeted game developed for PlayStation, created and tested using PlayStation-specific APIs and devkits. The project was developed by a team of three over the course of a semester (approximately twelve weeks) and required close collaboration, platform-specific testing, and performance awareness.

My primary contribution to the project was the design and implementation of the drone mechanic. Throughout the level, players can interact with in-world terminals to gain control of a remotely operated drone. Once deployed, the drone can be piloted across the map to locate and sabotage the opposing player, culminating in a timed detonation.

This mechanic introduces an asymmetric layer to gameplay, shifting the player temporarily from direct confrontation to tactical disruption and positioning.

The drone’s visual presentation was heavily inspired by the Dune films and games, particularly their use of abstract, high-contrast surveillance imagery. To achieve a similar aesthetic, I implemented a custom renderer feature using Unity’s Universal Render Pipeline (URP).

The final visual effect is composed of multiple render passes, each with a clearly defined responsibility:

Skybox Tint & Static Overlay

Applies colour grading to the skybox and overlays a subtle static effect to reinforce the drone’s surveillance perspective.

Scene Outlining (Difference of Gaussians)

Uses a Difference of Gaussians technique to generate a high-contrast, black-and-white outlined version of the scene, enhancing readability and stylisation.

Character Highlighting (Fresnel Shader)

Applies a Fresnel-based shader to characters, causing them to glow when viewed through the drone feed and ensuring clear visual separation from the environment.

Together, these passes combine to create a distinct visual mode that communicates both gameplay function and narrative tone.
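A Difference of Gaussians blurs the image with two Gaussians of different widths and subtracts one from the other: the result is close to zero in flat regions and large near edges, so thresholding it produces an outline. The project does this in a URP render pass on the GPU; the Python sketch below applies the same idea to a small greyscale image on the CPU. The base sigma, the 1.6 ratio between the two blurs, and the threshold are assumed example values, and edges are marked 1.0 rather than drawn as black lines.

```python
import math


def gaussian_kernel(sigma):
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights], radius


def blur(image, sigma):
    """Separable Gaussian blur (horizontal then vertical) with clamp-to-edge sampling."""
    kernel, radius = gaussian_kernel(sigma)
    rows, cols = len(image), len(image[0])

    def clamp(v, size):
        return max(0, min(size - 1, v))

    horizontal = [[sum(k * image[y][clamp(x + i - radius, cols)] for i, k in enumerate(kernel))
                   for x in range(cols)] for y in range(rows)]
    return [[sum(k * horizontal[clamp(y + i - radius, rows)][x] for i, k in enumerate(kernel))
             for x in range(cols)] for y in range(rows)]


def difference_of_gaussians(image, sigma=1.0, k=1.6, threshold=0.02):
    """Return a binary outline image: 1.0 where |DoG| exceeds threshold, else 0.0."""
    narrow = blur(image, sigma)
    wide = blur(image, sigma * k)
    return [[1.0 if abs(a - b) > threshold else 0.0 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(narrow, wide)]


# A 10x10 image with a vertical step from black to white at column 5.
step_image = [[0.0] * 5 + [1.0] * 5 for _ in range(10)]
outline = difference_of_gaussians(step_image)
```

On the GPU each blur is usually two passes (horizontal and vertical) over a render texture, mirroring the separable structure used here.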

In addition to the drone mechanic, I implemented the game’s inventory system. The system makes use of asynchronous programming to handle delayed responses to player input, allowing inventory actions to resolve after a variable amount of time without blocking gameplay.

This approach ensured responsive user interaction while supporting mechanics such as timed item usage and delayed effects.
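The write-up doesn't say which Unity mechanism drives the delayed inventory actions. As an illustration of the pattern, starting a timed item effect that resolves later while the game loop keeps running, here is a Python sketch using `asyncio`. The `Inventory` class, item names, delays, and the `game_loop` stand-in are hypothetical.

```python
import asyncio


class Inventory:
    def __init__(self):
        self.items = {"medkit": 2}
        self.log = []

    async def use_item(self, name, delay):
        """Consume an item now and apply its effect after `delay` seconds, without blocking."""
        if self.items.get(name, 0) == 0:
            self.log.append(f"no {name} left")
            return False
        self.items[name] -= 1
        self.log.append(f"using {name}")
        await asyncio.sleep(delay)  # other tasks (the game loop) keep running here
        self.log.append(f"{name} applied")
        return True


async def game_loop(inventory, frames, frame_time):
    # Stand-in for the per-frame update; it keeps ticking while the item resolves.
    for frame in range(frames):
        inventory.log.append(f"frame {frame}")
        await asyncio.sleep(frame_time)


async def main():
    inventory = Inventory()
    use = asyncio.create_task(inventory.use_item("medkit", delay=0.05))
    await game_loop(inventory, frames=5, frame_time=0.02)
    await use
    return inventory


inventory = asyncio.run(main())
print(inventory.log)
```

Frames continue to be logged between "using medkit" and "medkit applied", which is the non-blocking behaviour the inventory relies on.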

Working as part of a small team required clear division of responsibilities and frequent iteration. My work focused on gameplay systems, rendering features, and technical problem-solving, while maintaining compatibility with console hardware constraints and team workflows.

Freight

Freight is my first game developed using Unreal Engine, created as part of the 3D Games Programming and Game Asset Pipeline modules at university. The project was completed over approximately ten weeks and provided hands-on experience with Unreal Engine’s gameplay framework, asset workflows, and real-time rendering tools.

The game is set in a post-apocalyptic world where a group of survivors travel across a devastated landscape aboard an old freight train. Players take on the role of a “runner,” jumping off the moving train at points of interest to scavenge resources for the group before rejoining the convoy.

The player is equipped with two weapons—a revolver and a sword—which can be swapped between at runtime. Combat is designed to encourage weapon chaining, allowing players to combine ranged and melee attacks into fluid combos. This system was intended to promote aggressive, expressive play rather than isolated weapon usage.

As my first Unreal Engine project, Freight was a significant learning experience. It required adapting to Unreal’s component-based architecture, Blueprint scripting, and asset pipeline, while also managing scope within a fixed development timeframe.

One of the most successful outcomes of the project was the world-building and visual presentation. The environments, lighting, and overall atmosphere effectively communicate the game’s tone and setting, helping to establish a strong sense of place.

The development process and technical decisions behind the project are documented in one of my most successful YouTube videos, The Making of Freight, which showcases the project’s evolution and provides insight into my workflow.

While the visual design and world-building are strong, there are clear opportunities to improve the gameplay feel and feedback. Enhancements such as:

Improved particle effects for combat impacts

Screen shake and camera feedback during attacks

More responsive audio cues and hit reactions

would significantly increase the sense of weight and responsiveness with relatively small changes. Revisiting these elements would elevate the combat system and better align the game feel with the visual quality of the world.

Smaller projects, such as entries to small, friendly game jams and larger ones like the Global Game Jam (GGJ), as well as personal projects, can be found on my itch.io page: https://averagealtodriver.itch... Other module projects, such as a triangle rasterizer, are available on my GitHub: https://github.com/JamesB0010